Let's build a production-ready REST API

Sep 29, 2024

Let me show you how to build a professional REST API using Python, step by step. Next week I will show you how to deploy it.

Let’s start!

You can find all the source code in this repository

Give it a star ⭐ on GitHub to support my work

Our goal 🎯

Let’s build a REST API that can serve data on historical taxi rides in NYC. The original data is stored in one-month parquet files on this website, and our goal is to make it easily accessible through a REST API.

Today we will build this API and dockerize it. And next week we will deploy it to production.

Let’s go!

Steps 👣

1. Generate the project structure 🏗️

IMHO, the easiest way to develop professional Python code is to use Python Poetry.

Open your command line, install Poetry with a one-liner, and create a boilerplate project with

$ poetry new taxi-data-api-python --name src

Then generate the virtual environment that will encapsulate all the Python dependencies

$ cd taxi-data-api-python
$ poetry install

To keep our code modular, I recommend you create at least 2 source code files

- api.py, with the FastAPI application that routes incoming requests and returns responses.

- backend.py, which downloads, reads and returns the data requested by api.py.

The folder structure at this point should be this:

$ tree
.
├── README.md
├── poetry.lock
├── pyproject.toml
├── src
│   ├── __init__.py
│   ├── api.py
│   └── backend.py
└── tests
    └── __init__.py

2. FastAPI boilerplate 🍽️

FastAPI is one of the most popular Python libraries to build REST APIs, and the one we will use today.

Add it to your project dependencies, together with all optional dependencies

# the quotes stop shells like zsh from treating the square brackets as a glob pattern
$ poetry add "fastapi[all]"

Let’s start by creating a simple health endpoint that we can ping to check if our API is up and running.

Add the following code to api.py

# api.py
from fastapi import FastAPI

app = FastAPI()


@app.get('/health')
def health_check():
    return {'status': 'healthy'}

Now, go to the command line and start the server with hot reloading, so that changes to the code are automatically picked up by the server while we develop.

# start the server with hot reloading on port $PORT, or 8090 if PORT is not set
$ poetry run uvicorn src.api:app --reload --port ${PORT:-8090}

What is uvicorn? 🦄

Uvicorn is an ASGI (Asynchronous Server Gateway Interface) server implementation, commonly used to run Python web applications, including those built with FastAPI.

While FastAPI provides the framework for building your API, Uvicorn is the server that actually runs your application: it listens for incoming HTTP requests and passes them to your FastAPI app.
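
To make the ASGI idea more concrete, here is a minimal sketch of what an ASGI application looks like underneath a framework like FastAPI: an async callable that receives a scope dict plus receive and send callables. The names raw_health_app and call_app are made up for this illustration, and the tiny driver only imitates what a server like Uvicorn does; it is not how Uvicorn is implemented.

```python
import asyncio
import json


async def raw_health_app(scope, receive, send):
    """A bare ASGI app (hypothetical name) that answers every HTTP request."""
    if scope["type"] != "http":
        return  # this sketch ignores non-HTTP scopes such as "lifespan"
    body = json.dumps({"status": "healthy"}).encode("utf-8")
    # An ASGI HTTP response is sent as two messages: start, then body.
    await send({
        "type": "http.response.start",
        "status": 200,
        "headers": [(b"content-type", b"application/json")],
    })
    await send({"type": "http.response.body", "body": body})


async def call_app(app, path):
    """Toy stand-in for the server: builds a scope and collects the messages."""
    scope = {"type": "http", "method": "GET", "path": path, "headers": []}
    sent = []

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(message):
        sent.append(message)

    await app(scope, receive, send)
    return sent


messages = asyncio.run(call_app(raw_health_app, "/health"))
print(messages[0]["status"], messages[1]["body"].decode("utf-8"))
```

FastAPI hides all of this behind decorators like @app.get, which is why you rarely write raw ASGI code yourself.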

Open another terminal session and test that you can reach the health endpoint.

$ curl -X GET http://localhost:8090/health
{"status":"healthy"}

BOOM!
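
If you prefer to run the same check from Python, for example in a smoke test, a minimal sketch using only the standard library could look like this. The function name is_healthy and the opener parameter are assumptions made for this sketch; the URL and the expected payload come from the curl example above.

```python
import io
import json
import urllib.request


def is_healthy(url, timeout=2.0, opener=urllib.request.urlopen):
    """Return True if GET <url> answers with {"status": "healthy"}."""
    try:
        with opener(url, timeout=timeout) as response:
            payload = json.loads(response.read().decode("utf-8"))
    except (OSError, ValueError):
        # OSError covers connection errors (URLError is a subclass);
        # ValueError covers bodies that are not valid JSON.
        return False
    return isinstance(payload, dict) and payload.get("status") == "healthy"


# Offline demo with a fake opener, so the sketch runs without a server.
def fake_opener(url, timeout):
    return io.BytesIO(b'{"status":"healthy"}')


print(is_healthy("http://localhost:8090/health", opener=fake_opener))
```

With the server running, calling is_healthy("http://localhost:8090/health") without the opener argument sends a real request.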

3. Add a /trips endpoint to the REST API 🚕

Let’s add another GET endpoint called trips, that takes 2 parameters:

- from_ms: timestamp from which we want to get taxi trips data, expressed as Unix milliseconds, and

- n_results: maximum number of results we return, with a default value of 100.

# api.py (continued)
from fastapi import Query


@app.get('/trips', response_model=TripsResponse)
def get_trip(
    from_ms: int = Query(..., description='Unix milliseconds'),
    n_results: int = Query(100, description='Number of results to output'),
):
    # ... implementation details ...

and returns a custom response type with

- trips, which is a (possibly empty) list of Trip objects,

- next_from_ms, the timestamp the user should request to fetch the next batch of data, and

- message, an optional string with a human-readable status or error message.

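from typing import Optional

from pydantic import BaseModel


# Trip, the model for a single taxi ride, must be defined before this class
class TripsResponse(BaseModel):
    trips: Optional[list[Trip]] = None
    next_from_ms: Optional[int] = None
    message: Optional[str] = None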
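
Since from_ms and next_from_ms are Unix milliseconds, it helps to have a couple of small converters at hand. The sketch below uses only the standard library and assumes timestamps are interpreted as UTC, which is an assumption of this example; the helper names are hypothetical too.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def datetime_to_ms(dt):
    """Convert a timezone-aware datetime to Unix milliseconds (integer math)."""
    return (dt - EPOCH) // timedelta(milliseconds=1)


def ms_to_datetime(ms):
    """Convert Unix milliseconds to a UTC datetime."""
    return EPOCH + timedelta(milliseconds=ms)


def year_and_month(ms):
    """Year and month a timestamp falls in, e.g. to pick a monthly file."""
    dt = ms_to_datetime(ms)
    return dt.year, dt.month


from_ms = 1674561748000
print(ms_to_datetime(from_ms).isoformat())
print(year_and_month(from_ms))
```

Under the UTC assumption, 1674561748000 corresponds to 2023-01-24 12:02:28, so a request with that from_ms falls in the January 2023 file.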

4. Add the backend logic 🧠

Here is the meat of your API. In our case, our get_trips(from_ms, n_results) function

- downloads the parquet file from the external website for the given year and month

df: Optional[pd.DataFrame] = read_parquet_file(year, month)

- filters the results based on the time range and n_results

df = df[df['tpep_pickup_datetime_ms'] > from_ms]
df = df.head(n_results)

- returns the response formatted as a list of Trip objects to the FastAPI app

# pandas dataframe to list of dicts
trips = df.to_dict(orient='records')
# list of dicts to list of Trips
trips = [Trip(**trip) for trip in trips]
return trips
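
The filtering and pagination logic can also be sketched without pandas, which makes it easy to test in isolation. This is a plain-Python stand-in, not the code from the repository: rows are dicts with a tpep_pickup_datetime_ms key as in the snippet above, select_trips is a hypothetical name, the sample rows are made up, and next_from_ms is assumed to be the pickup timestamp of the last returned trip.

```python
def select_trips(rows, from_ms, n_results=100):
    """Return (trips, next_from_ms) for rows picked up strictly after from_ms."""
    # Sort by pickup time so "the first n_results" is well defined.
    ordered = sorted(rows, key=lambda row: row["tpep_pickup_datetime_ms"])
    matching = [r for r in ordered if r["tpep_pickup_datetime_ms"] > from_ms]
    trips = matching[:n_results]
    # Assumption: the cursor for the next request is the last pickup returned.
    next_from_ms = trips[-1]["tpep_pickup_datetime_ms"] if trips else None
    return trips, next_from_ms


# Made-up rows, only for illustration.
rows = [
    {"tpep_pickup_datetime_ms": 1674561749000, "trip_distance": 0.8},
    {"tpep_pickup_datetime_ms": 1674561700000, "trip_distance": 2.1},
    {"tpep_pickup_datetime_ms": 1674561800000, "trip_distance": 1.5},
]
trips, next_from_ms = select_trips(rows, from_ms=1674561748000, n_results=1)
print(trips, next_from_ms)
```

One consequence of a strict "greater than" filter combined with this cursor: if several trips share the exact same pickup millisecond and the page ends in the middle of them, the remaining ones are skipped on the next request.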

Let’s now check everything works.

5. Check the /trips endpoint works ✔️

Send a sample request to your API

$ curl -X GET "http://localhost:${PORT:-8090}/trips?from_ms=1674561748000&n_results=1"

{"trips":[{"tpep_pickup_datetime":"2023-01-24T12:02:29","tpep_dropoff_datetime":"2023-01-24T12:11:17","trip_distance":0.8,"fare_amount":9.3}],"next_from_ms":1674561749000,"message":"Success. Returned 1 trips."}

BOOM! Things work.
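
Because every response carries next_from_ms, a client can walk through the data page by page. The sketch below shows one way to do that. Here iter_trips and fetch_page are hypothetical names, and fetch_page is any callable that takes (from_ms, n_results) and returns the parsed JSON response as a dict. In real use it would wrap an HTTP call to /trips; a fake in-memory version with made-up data keeps the example runnable offline.

```python
def iter_trips(fetch_page, from_ms, n_results=100, max_pages=1000):
    """Yield trips page by page, following next_from_ms until data runs out."""
    for _ in range(max_pages):
        page = fetch_page(from_ms, n_results)
        trips = page.get("trips") or []
        yield from trips
        next_from_ms = page.get("next_from_ms")
        # Stop on an empty page or when the cursor does not move forward.
        if not trips or next_from_ms is None or next_from_ms <= from_ms:
            return
        from_ms = next_from_ms


# Fake backend with made-up pickup timestamps, standing in for the real API.
PICKUPS_MS = [1000, 2000, 3000, 4000, 5000]


def fake_fetch_page(from_ms, n_results):
    selected = [ms for ms in PICKUPS_MS if ms > from_ms][:n_results]
    return {
        "trips": [{"pickup_ms": ms} for ms in selected],
        "next_from_ms": selected[-1] if selected else None,
    }


all_trips = list(iter_trips(fake_fetch_page, from_ms=0, n_results=2))
print([trip["pickup_ms"] for trip in all_trips])
```

The max_pages cap and the "cursor must move forward" check are defensive choices so a misbehaving server cannot trap the client in an endless loop.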

Let’s now dockerize our API so it is ready to be deployed.

Why Docker? 🤔

Docker is the #1 most-used developer tool in the world, and do you know why?

Because it solves the #1 most-annoying issue that every Software and ML engineer faces every day.

And that is, having an application (e.g. our REST API in this case) that runs perfectly on your local machine but fails to work properly when deployed to production, aka the “But it works on my machine” issue.

6. Dockerize our API 📦

The first step is to write the Dockerfile. The Dockerfile is a text file that you commit in your repo

├── Dockerfile

├── README.md

├── poetry.lock

├── pyproject.toml

├── src

│ ├── __init__.py

│ ├── api.py

│ └── backend.py

└── tests

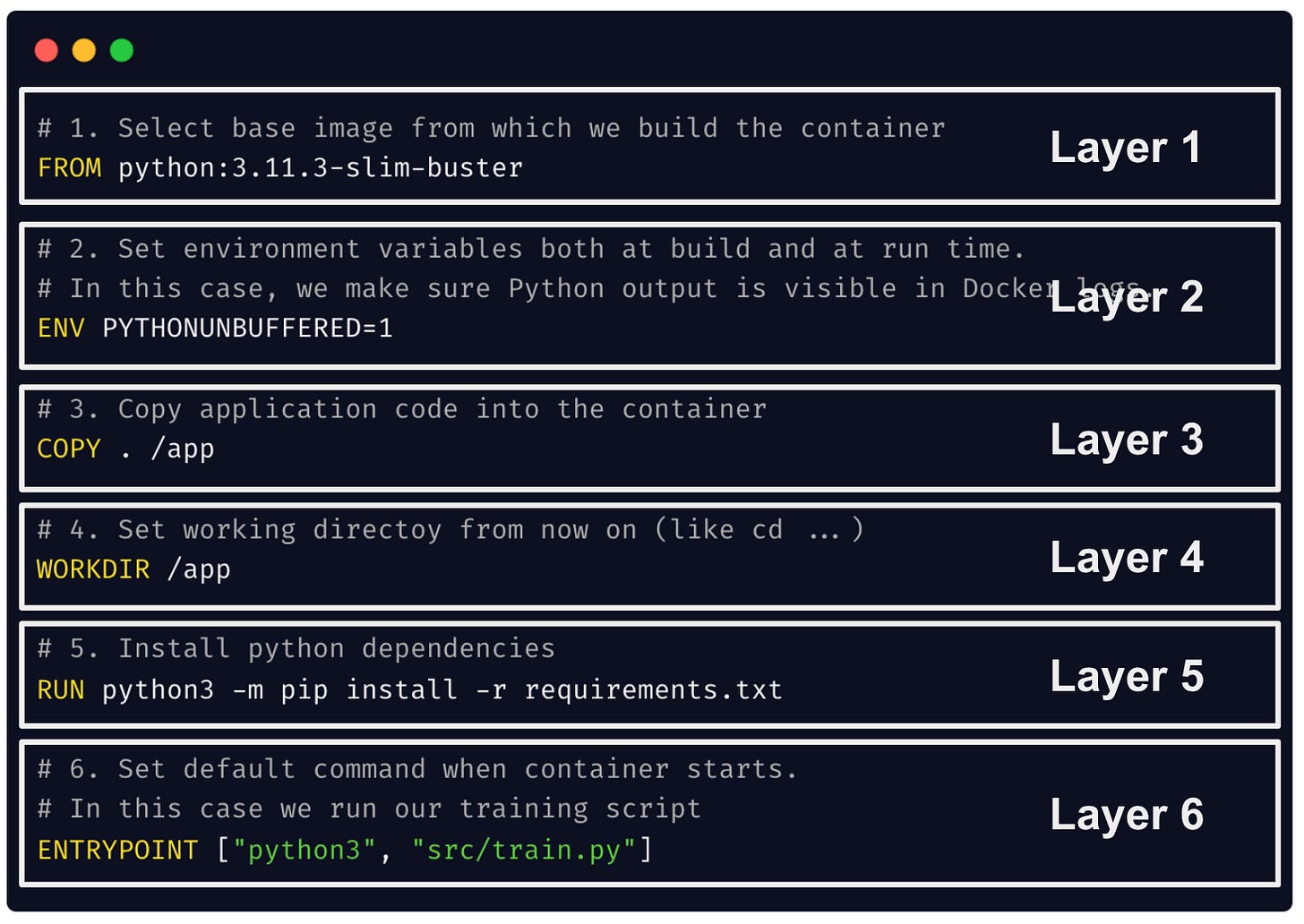

└── __init__.pywith a layered set of instructions that tell the docker-engine how to build your environment, for example ↓

From this Dockerfile you can build your Docker image

$ docker build -t taxi-data-api-python .

And from this image you can run a container that maps the container's internal port 8000 to port 8090 on your localhost.

$ docker run -p 8090:8000 taxi-data-api-python

Finally, you can check again that everything works with another curl, or simply by visiting this URL from whatever web browser you use (e.g. Chrome)

BOOM!

Tip 🎁 → Makefiles to the rescue!

I recommend you create a Makefile to streamline your workflow. In this file you define a set of common commands you constantly run when developing your service, for example

- To start your fully Dockerized API locally you do

$ make run

- And to test it works you do

$ make sample-request

What’s next? 🔜

Next week I will show you how to deploy this API and make it accessible to the whole world. So you escape from the localhost hell, and start shipping ML software that others can use.

Talk to you next week,

Peace and Love

Pau